Electro-Optical (EO) sensors have been core payloads for aerial systems for years. Current sensor technology offers mature products not only in the visible (VIS) and near infrared (NIR) bands of the optical spectrum, but also in others such as the long wave, medium wave and short wave infrared (LWIR, MWIR, SWIR) bands. Moreover, advances in miniaturization have resulted in small, low-SWaP (size, weight and power) sensors that can be carried on board medium-sized and even small UAS. The ability to easily obtain aerial imagery in different bands of the spectrum has enabled a rapid increase in the number of services and applications based on the exploitation of such data, ranging from precision agriculture to ISR and aerial photography.

Despite all this, the use of EO sensors and systems on board UAS still involves a considerable amount of human supervision. Typical scenarios involve either real-time monitoring, by an operator, of a video feed relayed to a ground station, or offline manual analysis of data previously captured and stored on board the UAS. Fully exploiting the potential of EO sensors, and building systems that minimize the need for manual intervention and thus enable autonomous or at least semi-autonomous operations, requires progress in several areas. These include Intelligent Video Analytics, as well as the processing hardware that allows such analysis to run online, on board the UAS.

Challenges in intelligent video analytics for UAS

In this context, Gradiant is building on years of expertise in Intelligent Video Analytics to develop new techniques. These techniques adapt to advances in sensor technology, embrace new paradigms in image processing and machine learning such as parallel processing and deep learning, and are optimized for the low-SWaP hardware available for on-board processing whenever this is required.

As part of this ongoing effort, Gradiant is facing numerous technical challenges. The most relevant of these are small target detection and the exploitation of advances in Deep Neural Networks (DNNs). Solving these challenges allows us to support autonomous or semi-autonomous missions from and for UAS in very demanding operational scenarios.

Small Target Detection

One of the most relevant challenges in Video Analytics for UAS-related applications in general, and for Intelligence, Surveillance and Reconnaissance (ISR) in particular, is long-range object detection. This challenge has prompted great efforts in the Video Analytics research community towards Dim Target Detection (low signal-to-clutter ratio) and Small Target Detection (targets typically smaller than 16×16 pixels). In ISR applications involving UAS, small and dim target detection becomes even more challenging, because missions usually take place in natural, highly dynamic scenarios. In maritime scenarios, for example, it is usually necessary to model the water surface as seen by the sensor in use, which is a very difficult task in itself. Other factors are also common both on land and at sea. These include sudden changes in illumination and perspective, and distractors of all kinds such as birds, debris and clouds.
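Dimness is often quantified with a local signal-to-clutter ratio (SCR). This is the difference between the mean target intensity and the mean of the surrounding background, divided by the standard deviation of that background. Definitions vary across the literature. The sketch below assumes square target and background windows whose sizes (`target_half`, `background_half`) are illustrative choices, with NumPy as the only dependency.

```python
import numpy as np


def signal_to_clutter_ratio(image, center, target_half=2, background_half=8):
    """Local SCR = |mean(target) - mean(background)| / std(background).

    The target region is the (2*target_half+1)^2 square around `center`;
    the background is the rest of the (2*background_half+1)^2 square,
    clipped at the image borders.
    """
    img = np.asarray(image, dtype=float)
    r, c = center
    # Outer (background) window, clipped to the image
    r0, r1 = max(r - background_half, 0), min(r + background_half + 1, img.shape[0])
    c0, c1 = max(c - background_half, 0), min(c + background_half + 1, img.shape[1])
    window = img[r0:r1, c0:c1]
    # Boolean mask of the target pixels, in window coordinates
    is_target = np.zeros(window.shape, dtype=bool)
    is_target[max(r - target_half, r0) - r0:min(r + target_half + 1, r1) - r0,
              max(c - target_half, c0) - c0:min(c + target_half + 1, c1) - c0] = True
    target, background = window[is_target], window[~is_target]
    sigma_b = background.std()
    if sigma_b == 0:
        return float("inf")  # perfectly flat background
    return float(abs(target.mean() - background.mean()) / sigma_b)
```

A target with an SCR of only a few units sits barely above the background fluctuations. This is why a single global intensity threshold tends to fail for dim targets.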

All this considered, most work in small and dim target detection has focused on thermal infrared (TIR) sensors[i]. This is despite the greater maturity of sensor technology in the visible range of the spectrum, which usually provides better SNR and resolution. The reason is the need to operate in low-visibility conditions, a common requirement in many ISR and non-ISR applications involving UAS. It is worth pointing out that the challenges to image and video analysis vary considerably depending on the sensor and the range of the optical spectrum considered. As a result, techniques conceived for TIR usually cannot be extended to images in the visible range, and vice versa.

For many years, the most common techniques for small and dim target detection have been wavelets and contrast filtering. Recently, a number of alternative approaches have been proposed. The most relevant are described below.

Temporal filtering using banks of hidden Markov model (HMM) filters has been considered in several works[ii]. Alternatively, Zsedrovits et al.[iii] propose a solution based on the following processing pipeline: contrast enhancement via center-surround filters, morphological filtering, adaptive thresholding, and tracking of detected objects. In other approaches[iv], the pipeline involves top-hat preprocessing followed by detection via the fusion of several low-level methods. These include detection by tracking, contrast enhancement, Difference of Gaussians (DoG) filtering, Fourier spectral whitening and inter-frame differencing. Apart from these, a number of methods have been proposed that use visual saliency to identify visually relevant regions along different perceptual dimensions[v],[vi].
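A center-surround stage of the kind used in these pipelines can be sketched as a Difference of Gaussians followed by an adaptive threshold. This is a minimal illustration, not the implementation from [iii] or [iv]. It assumes a single-channel frame stored as a NumPy array, uses a global threshold of `k` standard deviations above the mean DoG response, and keeps only 3×3 local maxima. It omits the morphological filtering and tracking steps. The sigma and `k` values are illustrative assumptions.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def gaussian_blur(img, sigma):
    """Separable Gaussian blur with reflective padding (output has the input shape)."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x ** 2 / (2 * sigma ** 2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="reflect")
    # 'valid' convolution along rows, then columns, removes the padding again
    rows = np.apply_along_axis(lambda v: np.convolve(v, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, kernel, mode="valid"), 0, rows)


def detect_small_targets(frame, sigma_center=1.0, sigma_surround=4.0, k=5.0):
    """Return (row, col) peaks of a center-surround (DoG) response above an adaptive threshold."""
    img = np.asarray(frame, dtype=float)
    # Center-surround contrast: small bright blobs respond strongly, smooth background cancels out
    dog = gaussian_blur(img, sigma_center) - gaussian_blur(img, sigma_surround)
    # Adaptive threshold derived from the statistics of the current frame
    threshold = dog.mean() + k * dog.std()
    # Keep only pixels that are the maximum of their 3x3 neighbourhood
    padded = np.pad(dog, 1, mode="constant", constant_values=-np.inf)
    local_max = sliding_window_view(padded, (3, 3)).max(axis=(2, 3))
    peaks = (dog >= threshold) & (dog == local_max)
    return [(int(r), int(c)) for r, c in np.argwhere(peaks)]
```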

Alternatively, a number of works in the literature take a different approach, based on detecting objects via classification. This often involves applying binary classifiers, trained for a particular type of object, to a whole image or set of images using a sliding window. When the objects of interest have very low resolution, as is the case in small target detection, efforts have focused on learning sparse low-level features for both the objects of interest and the backgrounds considered[vii],[viii].
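The sliding window scheme can be sketched as follows. The classifier here is a hypothetical linear scorer on zero-mean raw pixels (`weights`, `bias`). It stands in for the learned sparse features of [vii] and [viii]. The window size, stride and threshold are illustrative assumptions.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def sliding_window_detect(image, weights, bias=0.0, win=8, stride=2, threshold=0.0):
    """Score every win x win window with a (hypothetical) linear classifier w.x + b.

    Returns (row, col, score) for windows scoring above `threshold`,
    where (row, col) is the window's top-left corner in the image.
    """
    img = np.asarray(image, dtype=float)
    # All win x win windows, subsampled by `stride` in both directions
    windows = sliding_window_view(img, (win, win))[::stride, ::stride]
    # Raw-pixel feature vector per window, made zero-mean (removes local brightness)
    feats = windows.reshape(windows.shape[0], windows.shape[1], -1)
    feats = feats - feats.mean(axis=-1, keepdims=True)
    scores = feats @ np.asarray(weights, dtype=float) + bias
    rows, cols = np.nonzero(scores > threshold)
    return [(int(r) * stride, int(c) * stride, float(scores[r, c])) for r, c in zip(rows, cols)]


# Usage: a zero-mean template of a bright 4x4 square acts as the "classifier"
template = np.zeros((8, 8))
template[2:6, 2:6] = 1.0
weights = (template - template.mean()).ravel()
image = np.zeros((32, 32))
image[10:14, 20:24] = 1.0
best = max(sliding_window_detect(image, weights), key=lambda d: d[2])
print(best)  # (8, 18, 12.0)
```

In a real system the weights would come from training on object and background samples. The loop over positions stays the same whatever classifier is plugged in.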

A very relevant example of a classification-based strategy for the detection of small UAS has been presented by Sapkota et al.[ix]. This work uses Histograms of Oriented Gradients (HOG) as low-level features, together with an AdaBoost classifier. It also uses tracking to eliminate detections that are temporally inconsistent with previous frames. As an alternative, Rozantsev et al.[x] have proposed a spatio-temporal approach. Instead of applying a classifier to 2D spatial windows, it uses 3D spatio-temporal windows in a sliding window scheme. As for the classifier, a spatio-temporal Convolutional Neural Network (3D CNN) is proposed. The reported results are considerably better than those of baseline algorithms relying on low-level features and of state-of-the-art 2D classification. The approach achieves an average precision of 85% over a small test dataset including micro UAS and planes.
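In its simplest form, using tracking to discard temporally inconsistent detections can be approximated by a persistence check. A detection is confirmed only if nearby detections were also found in enough recent frames. The sketch below is not the tracker of [ix]. The history length, minimum number of hits and gating radius (in pixels) are assumptions, and detections are plain (x, y) image coordinates.

```python
import math
from collections import deque


class TemporalConsistencyFilter:
    """Confirm detections that persist over recent frames (a simple persistence check)."""

    def __init__(self, history=5, min_hits=3, gate=5.0):
        self.frames = deque(maxlen=history)  # detections from the last `history` frames
        self.min_hits = min_hits             # frames in which a nearby detection must appear
        self.gate = gate                     # maximum distance in pixels to count as "nearby"

    def update(self, detections):
        """Add this frame's (x, y) detections and return the confirmed ones."""
        self.frames.append(list(detections))
        confirmed = []
        for x, y in detections:
            # Count recent frames (including this one) with a detection within the gate
            hits = sum(
                any(math.hypot(x - px, y - py) <= self.gate for px, py in frame)
                for frame in self.frames
            )
            if hits >= self.min_hits:
                confirmed.append((x, y))
        return confirmed
```

A slowly moving target is confirmed after `min_hits` frames. A one-off distractor, such as a glint or a single noisy response, never accumulates enough hits.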

Exploitation of advances in DNN

Another very relevant challenge on which Gradiant is working is the exploitation of advances in DNNs. Beyond their use in classification problems[xi], DNNs are increasingly used to solve other Video Analytics problems. Object detection, for example, is a very common problem in UAS-related scenarios. Here, detection involves both localizing an object in an image and classifying it as belonging to one of several classes. Object detection can be approached by extending classification architectures: candidate regions are generated, and for each one the probability that it contains an object of interest is evaluated. This is the case for architectures such as YOLO[xii], Faster R-CNN[xiii] and SqueezeDet[xiv]. Among other applications of DNNs to Video Analytics in UAS-related scenarios, image segmentation[xv], object tracking[xvi] and image super-resolution[xvii] are particularly relevant.
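Because candidate regions overlap, several of them usually fire on the same object. Detectors such as Faster R-CNN and YOLO therefore post-process their output with non-maximum suppression (NMS). NMS keeps the highest-scoring box and discards boxes that overlap it too much. The sketch below is a minimal greedy NMS. It assumes boxes in (x1, y1, x2, y2) pixel coordinates and an illustrative IoU threshold of 0.5.

```python
import numpy as np


def iou(box, boxes):
    """Intersection over union between one box and an (N, 4) array of boxes."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)


def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: return indices of the kept boxes, highest score first."""
    boxes = np.asarray(boxes, dtype=float)
    order = np.argsort(scores)[::-1]  # candidate indices, best first
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        rest = order[1:]
        # Drop every remaining candidate that overlaps the kept box too much
        order = rest[iou(boxes[best], boxes[rest]) < iou_threshold]
    return keep


boxes = [[0, 0, 10, 10], [1, 1, 11, 11], [50, 50, 60, 60]]
print(non_max_suppression(boxes, [0.9, 0.8, 0.7]))  # [0, 2]
```

For small targets only a few pixels across, a fixed IoU threshold is sensitive to one-pixel localization errors. The threshold value is therefore a design choice to tune per application.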

The use of DNNs for Intelligent Video Analytics has great potential. However, a number of challenges must be faced in order to fully exploit it. Among these, the ability to train neural networks with relatively small datasets is particularly crucial, as the ideal, almost infinite training dataset is not usually available. This is especially so in scenarios where objects or events of interest, and therefore training samples, are few and far between, and difficult or costly to obtain. Related to this, the most common training strategies for DNNs are supervised and therefore require annotated or calibrated data. This means that an annotated dataset must be built beforehand. That might not be possible in ISR, for example, because the operational cost of obtaining such a dataset is extremely high. In addition, the nature of the objects of interest is often not known or cannot easily be characterized.

Maritime surveillance and Counter UAS by Gradiant

Gradiant is working on the challenges above, and others, in order to provide solutions for maritime surveillance and Counter UAS in different operational scenarios.

Maritime surveillance from UAS

Gradiant's offering in Maritime Surveillance from UAS includes solutions for the detection of targets. These range from small targets, in line with the Small Target Detection challenge above, to targets large enough to allow visual identification. The offering also covers the classification and tracking of vessels and other objects of interest, with support for both on-board and ground-based processing.

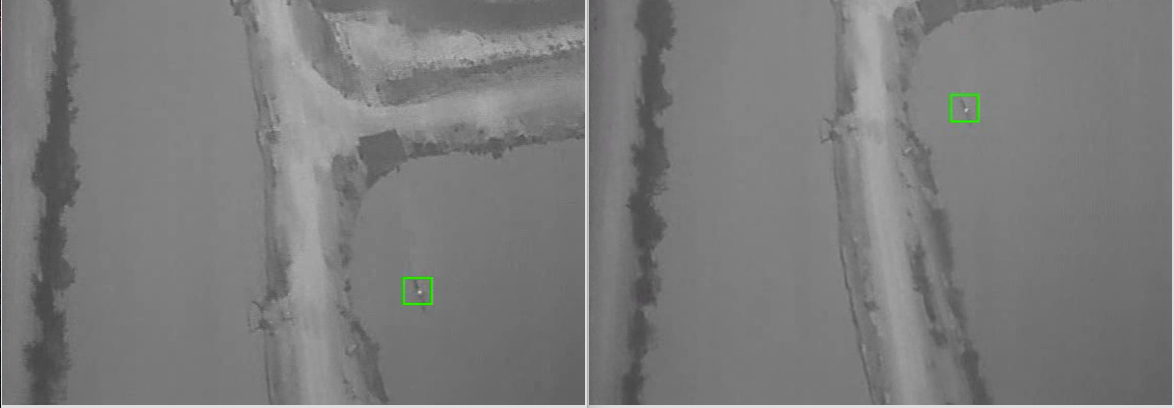

Figure 1 illustrates a surveillance scenario from UAS in a maritime environment. The figure shows two consecutive frames in which a small boat is detected on the surface of the water. The UAS is equipped with a low-cost, low-SWaP LWIR sensor. Besides the water pools, there are areas of land with a considerable amount of clutter due to rocks and vegetation. The pools have a more homogeneous background, but the objects of interest (small boats and swimmers) are only a few pixels in size due to the lens configuration and the flight altitude. The scenario therefore illustrates very well the challenges described in the sections above.

Figure 1: Small target detection on the surface of the water using a TIR sensor

Very good results have been obtained for the detection of intruders (small boats and swimmers) using techniques based on the estimation of low-level saliency. In particular, techniques based on equalization in the frequency domain have proved to be a good option for very low-resolution targets.
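The text does not detail the exact method used. One well-known member of the family of frequency-domain equalization techniques is spectral whitening, also called the phase-only transform. The magnitude of the image spectrum is flattened to one while its phase is kept. This suppresses large, smooth or periodic structures such as regular ripples, and leaves small isolated details standing out. The sketch below illustrates this assumption and is not Gradiant's implementation. In practice the saliency map would usually be smoothed and thresholded.

```python
import numpy as np


def spectral_whitening_saliency(frame, eps=1e-8):
    """Saliency map from a magnitude-equalised (whitened) image spectrum.

    Dividing the FFT by its magnitude gives every frequency the same weight,
    so energetic periodic patterns no longer dominate and point-like details
    reappear as sharp peaks after the inverse transform.
    """
    img = np.asarray(frame, dtype=float)
    spectrum = np.fft.fft2(img - img.mean())        # remove DC so the mean level is ignored
    whitened = spectrum / (np.abs(spectrum) + eps)  # keep phase, equalise magnitude
    recon = np.real(np.fft.ifft2(whitened))
    return recon ** 2                               # squared response as saliency
```

For example, consider a frame containing strong periodic ripples plus a faint point target. The raw intensity maximum lies on a ripple crest, while the maximum of this saliency map lies on the target.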

Beyond the small target detection challenge, this scenario presents a number of additional difficulties. For instance, the water and land areas must be segmented if only targets over the water are of interest. Moreover, tracking detected objects is particularly difficult, as they remain visible for only a few frames due to the speed of the UAS (a fixed-wing platform in this case).
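The text does not specify how water and land are separated. The sketch below is a deliberately simple illustration. As in Figure 1, it assumes that the water surface appears locally homogeneous in the LWIR image while land is cluttered by rocks and vegetation. Under that assumption, a local standard-deviation threshold can produce a water mask that is then used to discard detections over land. The window size and `max_std` are hypothetical values that would need tuning per sensor and sea state.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def water_mask(frame, win=15, max_std=1.0):
    """Label as water the pixels whose win x win neighbourhood is homogeneous."""
    img = np.asarray(frame, dtype=float)
    pad = win // 2
    padded = np.pad(img, pad, mode="reflect")
    # Local standard deviation around every pixel (same shape as the input)
    local_std = sliding_window_view(padded, (win, win)).std(axis=(2, 3))
    return local_std < max_std


def detections_over_water(detections, mask):
    """Keep only (row, col) detections that fall inside the water mask."""
    return [(r, c) for r, c in detections if mask[r, c]]
```

The window must be much larger than the targets, so that a small boat or swimmer does not by itself raise the local variance above `max_std`. Pixels near the shoreline are conservatively labelled as land.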

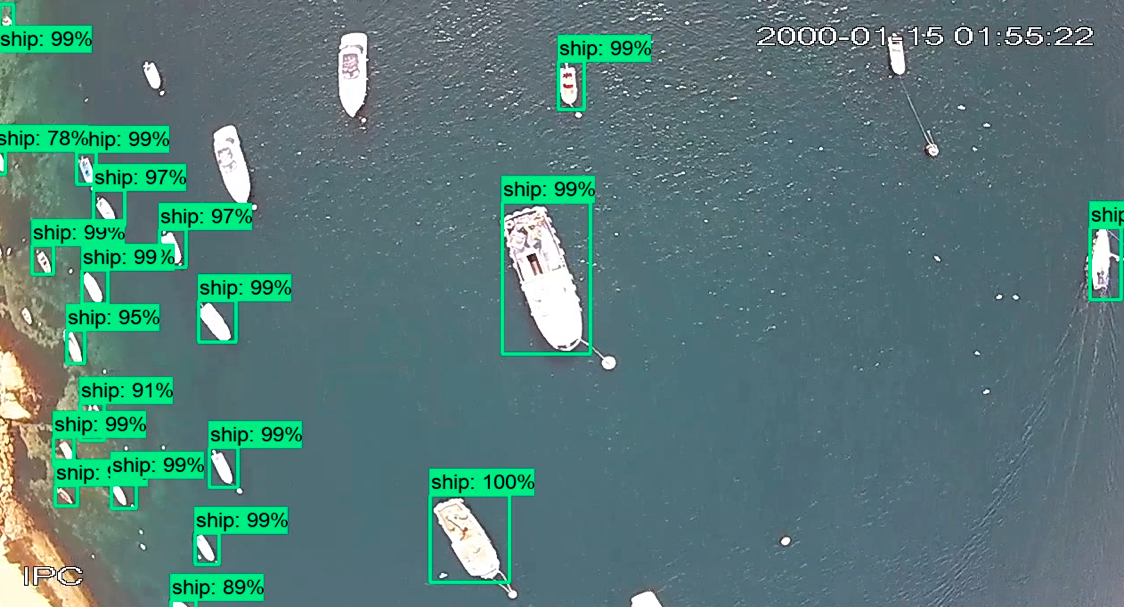

Figure 2 shows a different maritime scenario. In this case, a small UAV equipped with an RGB camera automatically detects and localizes vessels, so that their position and type can be checked against available AIS (Automatic Identification System) data. On-board Intelligent Video Analytics achieve well over 90% precision in automatic boat detection, in real time, from a small UAS. The figure shows two slightly different scenarios. One has a zenithal (top-down) view and very high-quality images; the other has an oblique view and adverse weather conditions. The approach used in this case includes DNNs on their own (top), as well as DNNs combined with other low-level detection methods (bottom).

Figure 2: Ship detection using DNN on board a UAS
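Checking detected vessels against AIS data requires associating each geolocated detection with a nearby AIS report. The sketch below uses the haversine great-circle distance and a nearest-neighbour match. It assumes that detections have already been converted to latitude and longitude, that AIS reports are dictionaries with the hypothetical keys `mmsi`, `lat` and `lon`, and a 100 m gating distance.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (spherical approximation)


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two points given in degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def match_to_ais(detections, ais_reports, gate_m=100.0):
    """Pair each detected vessel (lat, lon) with the nearest AIS report within gate_m.

    Returns (matches, unmatched): matches maps detection index -> MMSI,
    unmatched lists the indices of detections with no AIS report nearby.
    """
    matches, unmatched = {}, []
    for i, (lat, lon) in enumerate(detections):
        best = min(
            ais_reports,
            key=lambda r: haversine_m(lat, lon, r["lat"], r["lon"]),
            default=None,
        )
        if best is not None and haversine_m(lat, lon, best["lat"], best["lon"]) <= gate_m:
            matches[i] = best["mmsi"]
        else:
            unmatched.append(i)
    return matches, unmatched
```

This greedy matching may assign the same AIS report to more than one detection. An optimal one-to-one assignment, or a gate that grows with the age of each AIS report, would be natural refinements.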

Detection of drones

Gradiant's Video Analytics solution for drone detection and Counter UAS is built around the idea of early detection. It offers automatic drone vs. non-drone identification of targets, as well as tracking. This type of application has been attracting great interest from the research community, as it presents a number of technical challenges that include and extend those described above.

In this context, early detection means detecting UAS at as long a range as possible, and therefore detecting objects of interest that are as small in the image as possible. Moreover, there is an almost infinite variability of scenarios, lighting and weather conditions. For example, a drone above the horizon line can be relatively easy to segment. This situation may change within seconds as the target moves below the horizon. There, depending on the background and weather conditions, it may become almost invisible in any range of the optical spectrum. To add even more complexity, distractors such as birds and other elements in the scene can generate a considerable number of false positives.

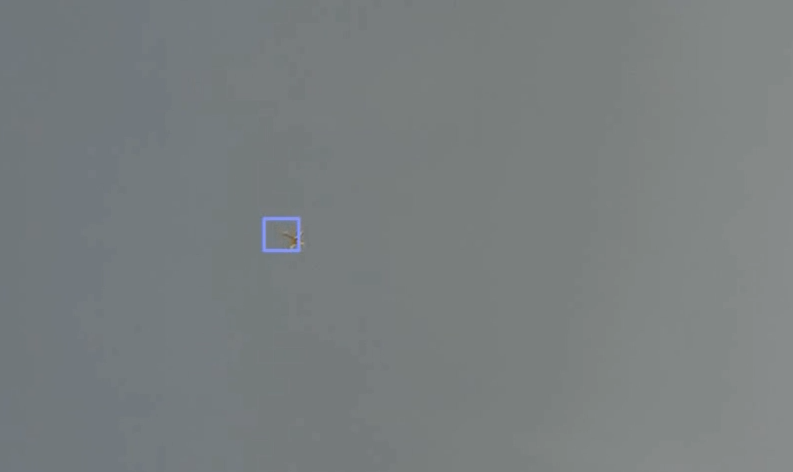

Gradiant's approach to the problem is based on a combination of low-level detection methods and DNNs. Figure 3 and Figure 4 illustrate drone detection over uncluttered backgrounds, at long range (very small, very low-resolution target) and at short range (small target) respectively. Above the horizon line, the background is uniform, so the drone can easily be detected with saliency-based techniques on a fixed camera. Similarly, on a sunny day with no wind, a background of vegetation is relatively stable, and change detection is possible using a fixed camera.

Figure 3: Detecting an approaching small drone at long range over an uncluttered background of vegetation (no wind)

Figure 4: Detecting a drone above the horizon line against a uniform, uncluttered background
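For the fixed-camera case with a stable background, change detection can be sketched with an exponential running-average background model. Each pixel keeps a running mean and variance, and pixels that deviate by more than `k` standard deviations are flagged as foreground. This is a generic textbook model rather than Gradiant's method. The values of `alpha`, `k` and `min_std` are illustrative assumptions.

```python
import numpy as np


class RunningAverageBackground:
    """Per-pixel running mean/variance background model for a fixed camera."""

    def __init__(self, alpha=0.05, k=4.0, min_std=1.0):
        self.alpha = alpha      # adaptation rate of the background model
        self.k = k              # foreground threshold, in per-pixel standard deviations
        self.min_std = min_std  # floor that keeps very stable pixels from becoming over-sensitive
        self.mean = None
        self.var = None

    def apply(self, frame):
        """Update the model with a new frame and return its boolean foreground mask."""
        f = np.asarray(frame, dtype=float)
        if self.mean is None:  # first frame initialises the model
            self.mean = f.copy()
            self.var = np.full_like(f, self.min_std ** 2)
            return np.zeros(f.shape, dtype=bool)
        diff = f - self.mean
        std = np.maximum(np.sqrt(self.var), self.min_std)
        foreground = np.abs(diff) > self.k * std
        # Adapt only where the pixel still looks like background
        a = np.where(foreground, 0.0, self.alpha)
        self.mean += a * diff
        self.var = (1 - a) * self.var + a * diff ** 2
        return foreground
```

The model relies on each pixel seeing the same part of the scene over time. Wind-blown vegetation or any camera motion breaks this assumption.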

However, in typical operational scenarios, such optimal weather conditions and uniform backgrounds are not common, and detection from moving cameras is usually required. Figure 5 shows one such situation: the camera is moving, and the wind causes the background of vegetation to generate a large amount of clutter. In this case, DNN-based detection networks prove to be a good solution on their own, as well as when combined with some of the low-level methods mentioned above. Similarly, Figure 6 shows drone detection over a highly dynamic background of water. There, ripples, reflections and changes in illumination prevent change detection techniques from being applied.

Figure 5: Small drone detection over a dynamic background with a moving camera

Figure 6: Drone detection over a highly dynamic background
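One reason change detection is harder on a moving camera is that the whole image shifts between frames. A common low-level mitigation is to estimate the global translation with phase correlation and align the frames before differencing. The sketch below illustrates this and is an assumption rather than a description of Gradiant's pipeline. It handles only integer, circular translations. Rotation, scale, parallax and dynamic water backgrounds such as the one in Figure 6 are outside its scope.

```python
import numpy as np


def estimate_shift(prev, curr):
    """Estimate the integer (dy, dx) such that curr ≈ np.roll(prev, (dy, dx), axis=(0, 1))."""
    cross = np.fft.fft2(curr) * np.conj(np.fft.fft2(prev))
    cross /= np.abs(cross) + 1e-12           # normalised cross-power spectrum
    corr = np.real(np.fft.ifft2(cross))      # sharp peak at the translation
    dy, dx = np.unravel_index(np.argmax(corr), corr.shape)
    h, w = corr.shape
    # Peaks in the second half of each axis correspond to negative shifts
    if dy > h // 2:
        dy -= h
    if dx > w // 2:
        dx -= w
    return int(dy), int(dx)


def compensated_difference(prev, curr):
    """Align prev to curr with the estimated global shift, then return |curr - aligned|."""
    dy, dx = estimate_shift(prev, curr)
    aligned = np.roll(prev, (dy, dx), axis=(0, 1))
    return np.abs(curr - aligned), (dy, dx)
```

On real frames, the border strip uncovered by the motion should be masked out after alignment. Residual differences then come from independently moving objects, or from residual misregistration and sensor noise.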

Conclusions

Gradiant's Video Analytics team is building on years of experience to provide operationally viable solutions to some of the most relevant challenges in video analysis for UAS scenarios. This is allowing the centre to support a variety of autonomous or semi-autonomous UAS-related missions, including maritime surveillance and Counter UAS.

This work consolidates Gradiant as a relevant player in the security and surveillance sectors. It also guarantees the centre's position as a technology provider for the UAS market, which is expected to boom in the coming years thanks to the definition of new products, services and operational scenarios.

References

[i] Survey on Dim Small Target Detection in Clutter Background: Wavelet, Inter-Frame and Filter Based algorithms. Procedia Engineering, Vol. 15, 2011, pp. 479-483

[ii] J. Lai, J. J. Ford, P. O'Shea and L. Mejias, "Vision-Based Estimation of Airborne Target Pseudobearing Rate using Hidden Markov Model Filters," IEEE Transactions on Aerospace and Electronic Systems.

[iii] T. Zsedrovits, A. Zarandy, B. Pencz, A. Hiba, M. Nameth and B. Vanek, “Distant aircraft detection in sense-and-avoid on kilo-processor architectures,” 2015 European Conference on Circuit Theory and Design (ECCTD), Trondheim, 2015, pp. 1-4

[iv] M. Nasiri, M. R. Mosavi and S. Mirzakuchaki, "Infrared dim small target detection with high reliability using saliency map fusion," IET Image Processing, vol. 10, no. 7.

[v] Qi S, Ming D, Ma J, Sun X, Tian J. Robust method for infrared small-target detection based on Boolean map visual theory. Applied Optics, 2014 Jun 20; 53(18):3929

[vi] A. Sobral, T. Bouwmans and E. h. ZahZah, “Double-constrained RPCA based on saliency maps for foreground detection in automated maritime surveillance,” 2015 12th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Karlsruhe, 2015, pp. 1-6.

[vii] Li Z-Z, Chen J, Hou Q, et al. Sparse Representation for Infrared Dim Target Detection via a Discriminative Over-Complete Dictionary Learned Online. Sensors, 14(6).

[viii] Li Z, et al. Infrared small moving target detection algorithm based on joint spatio-temporal sparse recovery. Infrared Physics & Technology, vol. 69, pp. 44–52.

[ix] K. R. Sapkota et al., “Vision-based Unmanned Aerial Vehicle detection and tracking for sense and avoid systems,” 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, 2016, pp. 1556-1561.

[x] A. Rozantsev, V. Lepetit and P. Fua, “Flying objects detection from a single moving camera,” 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, 2015, pp. 4128-4136.

[xi] Krizhevsky A, Sutskever I, Hinton G. ImageNet Classification with Deep Convolutional Neural Networks. Advances in Neural Information Processing Systems NIPS 25. 2012

[xii] Redmon J, Farhadi A. YOLO9000: Better, Faster, Stronger. arXiv preprint arXiv:1612.08242. 2016.

[xiii] Ren S, He K, Girshick R, Sun J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. Advances in Neural Information Processing Systems NIPS 28. 2015; 91–99.

[xiv] Wu B, Iandola F, Jin PH, Keutzer K. SqueezeDet: Unified, Small, Low Power Fully Convolutional Neural Networks for Real-Time Object Detection for Autonomous Driving. arXiv preprint arXiv:1612.01051. 2016.

[xv] Long J, Shelhamer E, Darrell T. Fully Convolutional Networks for Semantic Segmentation. The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2015, pp. 3431-3440.

[xvi] Wang, Naiyan and Yeung, Dit-Yan. Learning a Deep Compact Image Representation for Visual Tracking. Advances in Neural Information Processing Systems NIPS 26. 2013.

[xvii] Dong C, Loy CC, Tang X. Accelerating the Super-Resolution Convolutional Neural Network. arXiv preprint arXiv:1608.00367. 2016.

Author: José A. Rodríguez Artolazábal, Head of Video Analytics in Multimodal Information Department at Gradiant