If you follow us on social media, you already know that last week we took part in Big Data Spain 2018, one of the three landmark conferences in Europe on Big Data, Artificial Intelligence, Cloud technologies and Digital Transformation.

With more than 1600 attendees and more than 90 speakers over two days, this edition had 4 simultaneous presentation tracks on technology and business around Big Data. Our colleagues Rafael Martínez and Agustín Cañas brief us on some of the key trends in the Big Data and Artificial Intelligence fields.

1. Free software is a must

Numerous companies whose profits came from developing proprietary, closed solutions have understood that moving closer to the free software world is necessary. To project a clearer image of community and transparency, they incorporate open source tools into their solutions (Kafka, Elastic, Redis, Avro, Spark, among others) or open up parts of their own developments, for example as GitHub repositories. This approach matches what newer companies in the sector such as Databricks, Data Artisans, Confluent or Redis Labs are doing, and what Alok Singh and Aljoscha Krettek explained in their talks.

Gradiant contributes to free software projects whenever intellectual property and privacy policies allow it. We are aware of the advantages of this model, so we also publish modules, libraries and containers that may be of interest to the community.

2. Software engineering for Machine Learning applications

The development process of Machine Learning solutions is similar to software engineering processes, but there are also some big differences that complicate the typical life-cycle stages of software products (requirements, analysis, design, implementation, testing, integration and deployment, maintenance…). As Joey Frazee from Databricks pointed out, “in Machine Learning we can and should consider the same stages but we always have to take into account the particularities in each case”. He concluded: “same process, different descriptions”.

As a result, there is a trend towards improving and simplifying the development process for machine learning products, and a general awareness in the community that this process should move closer to software engineering. The trend also aims to better define the needs of data science teams and their integration and collaboration with the software engineering teams already present in companies. We make intensive use of notebooks for data exploration and proofs of concept in the implementation stage. Now that this basis has been established, however, we are looking for alternatives to develop production-ready models. At the same time, in the deployment and testing stages, we give special importance to monitoring metrics that allow us to improve the quality of both the model and the deployment. In this respect, we can highlight our work with one of the main frameworks for stateful processing of data streams: Apache Flink.

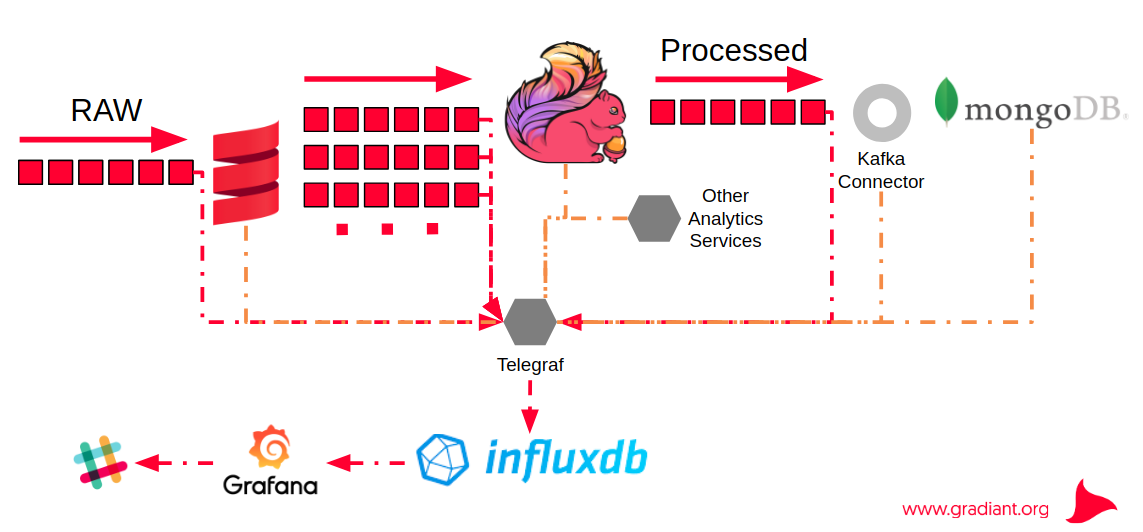

Figure. Monitoring for processes with Apache Flink. SIMPLIFY Project
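As an illustration of the kind of metric monitoring mentioned above, here is a minimal sketch in plain Python. It is not taken from the SIMPLIFY project or from Flink: the `RollingAccuracy` class, the window size and the alert threshold are all hypothetical, chosen only to show how a deployed model's quality could be tracked over its most recent predictions.

```python
from collections import deque


class RollingAccuracy:
    """Tracks prediction accuracy over the most recent `window` labelled samples."""

    def __init__(self, window: int, alert_below: float) -> None:
        self.hits = deque(maxlen=window)   # 1 for a correct prediction, 0 otherwise
        self.alert_below = alert_below     # hypothetical quality threshold

    def update(self, predicted, actual) -> None:
        self.hits.append(1 if predicted == actual else 0)

    @property
    def accuracy(self) -> float:
        # With no samples yet, report a neutral 1.0 rather than dividing by zero.
        return sum(self.hits) / len(self.hits) if self.hits else 1.0

    def degraded(self) -> bool:
        # Only alert once the window is full, to avoid noisy start-up alarms.
        return len(self.hits) == self.hits.maxlen and self.accuracy < self.alert_below


monitor = RollingAccuracy(window=4, alert_below=0.75)
for predicted, actual in [(1, 1), (0, 0), (1, 0), (0, 1), (1, 0)]:
    monitor.update(predicted, actual)

print(monitor.accuracy, monitor.degraded())
```

In a streaming framework the same logic would typically live in keyed, stateful operators; the sketch only shows the bookkeeping itself.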

3. Simplify the Machine Learning process

As we have already said, developing Machine Learning solutions is a complex process that requires dealing with challenges beyond those found in software development. Matei Zaharia, from Databricks, identified the main differences:

- In ML there are many different tools to cover the different phases of the process

- It’s hard to monitor the experiments

- It’s difficult to reproduce the results

- ML solutions are difficult to deploy

For this reason, slogans like “Writing ML code is not the difficult part of the life cycle of ML solutions” are often quoted. There is a trend to use containers and orchestrators (Docker, Kubernetes) and to build platforms that simplify and support the different stages of the life cycle (e.g. Kubeflow from Google, MLflow from Databricks, DC/OS from Mesosphere). Other tools ease the process through model export (e.g. with PFA, the Portable Format for Analytics), pipeline construction (e.g. Nussknacker, Kubeflow Pipelines) or simpler deployment strategies.
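To make the experiment-monitoring and reproducibility problems more concrete, the following is a minimal sketch of what an experiment tracker records. It uses only the Python standard library and does not reproduce the API of MLflow or any other platform mentioned here; `Run`, `ExperimentLog`, `fingerprint` and the toy data are hypothetical names and values made up for the example.

```python
import hashlib
import json
from dataclasses import dataclass, field


@dataclass
class Run:
    """One experiment run: what went in (params, data) and what came out (metrics)."""
    params: dict
    data_fingerprint: str
    metrics: dict = field(default_factory=dict)


def fingerprint(rows) -> str:
    # Hash the training data so a run can later be matched to the exact dataset.
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]


class ExperimentLog:
    def __init__(self) -> None:
        self.runs = []

    def record(self, params, rows, metrics) -> Run:
        run = Run(dict(params), fingerprint(rows), dict(metrics))
        self.runs.append(run)
        return run

    def best(self, metric: str) -> Run:
        # Pick the run with the highest value of the given metric.
        return max(self.runs, key=lambda run: run.metrics[metric])


rows = [[0.1, 0], [0.9, 1]]  # toy dataset, made up for the example
log = ExperimentLog()
log.record({"max_depth": 3}, rows, {"accuracy": 0.81})
log.record({"max_depth": 5}, rows, {"accuracy": 0.86})
best_run = log.best("accuracy")
print(best_run.params, best_run.data_fingerprint)
```

Storing the parameters and a data fingerprint next to each metric is what makes it possible to compare runs and to reproduce the best one later.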

At Gradiant we have also worked along this line of process simplification, and we make intensive use of Docker containers (Gradiant Docker repo) and orchestrators such as Kubernetes (both for ML applications and for other types of solutions). Examples of this work are the ITBox and HOSPITEC projects. We also have experience with “integral” platforms. We would like to highlight the case of PNDA, a platform we contribute to, specifically in the network-pnda framework. This platform offers a lightweight alternative with a minimum set of components. Although oriented to network analysis, PNDA is a scalable open source platform generic enough to perform analysis in other Big Data contexts as well.

4. ‘SQL-like’ APIs, an upward trend

Providing SQL-type APIs is also becoming a significant trend among developers of Big Data streaming systems, because it simplifies the implementation of data pipelines that would otherwise be difficult and tedious. In this edition of Big Data Spain we saw interesting examples for Flink and Kafka.

Apache Flink has incorporated the relational Table API & SQL on top of its core APIs (DataSet and DataStream) with the aim of achieving a “great unification”, in which the processing engine adapts to the workflow by transparently integrating batch and streaming data sources under the same syntax and semantics. With KSQL (Streaming SQL for Apache Kafka), it is possible to run queries on a data stream using the usual SQL statements and clauses (CREATE TABLE, SELECT, FROM, GROUP BY, JOIN, etc.). This practically eliminates the need to write application code. With this evolution, Confluent opens a new window onto the stream processing world that complements Kafka Streams and is aimed mainly at engineers and data analysts.
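The appeal of a declarative API can be seen by writing the same aggregation twice. The sketch below counts events per user in 60-second windows, first with hand-written Python and then as a SQL query. It uses Python's built-in `sqlite3` module purely as a stand-in: SQLite is a batch engine, whereas KSQL and Flink SQL evaluate queries continuously and have their own windowing syntax. The `clicks` table and the events are made up for the example.

```python
import sqlite3
from collections import Counter

# Made-up click events: (user_id, timestamp in seconds)
events = [("ana", 3), ("ana", 42), ("luis", 59), ("ana", 61), ("luis", 125)]

# Imperative version: count events per user and 60-second tumbling window.
manual = Counter((user_id, ts // 60 * 60) for user_id, ts in events)

# Declarative version: the same aggregation expressed as a SQL query.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE clicks (user_id TEXT, ts INTEGER)")
conn.executemany("INSERT INTO clicks VALUES (?, ?)", events)
rows = conn.execute(
    """
    SELECT user_id, (ts / 60) * 60 AS window_start, COUNT(*) AS n
    FROM clicks
    GROUP BY user_id, ts / 60
    ORDER BY user_id, window_start
    """
).fetchall()
conn.close()

for user_id, window_start, n in rows:
    print(user_id, window_start, n)
```

Both versions produce the same counts; the difference is that the query states what is wanted and leaves the execution strategy to the engine.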

The use cases of our most recent ML projects have made Gradiant an intensive user of this kind of API with different frameworks. One example is our work with the KSQL interface in the IRMAS project, a Unidad Mixta de Investigación (joint research unit) with Telefónica.

5. Graph databases

Graph-oriented databases continue to expand and consolidate their position in Big Data architectures that need to explore data with many-to-many relationships or navigate highly connected hierarchies. In these cases, you first need to know which type of graph database you need:

- LPG (Labeled Property Graph): more generic and flexible, scalable and easy to navigate, although less interoperable and less schema-oriented. They are ideal for applying graph mining techniques. Examples of this type are Neo4j and TigerGraph.

- RDF (Resource Description Framework): suitable for representing semantic data structured as triples. In general, they offer lower performance. Examples of this type are AllegroGraph and GraphDB.

- Multi-model (document, key-value): also flexible, although not optimized for graph analysis and sometimes too closely tied to a particular vendor. Examples of this type are ArangoDB, Azure Cosmos DB or Amazon Neptune.
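The difference between the first two data models in the list above can be sketched with plain Python structures. This is only an illustration of the two representations, not the storage format or query language of any of the products named; the identifiers (`p1`, `ex:follows`, and so on) are invented for the example.

```python
# Labeled property graph: nodes and edges both carry a label and key-value properties.
nodes = {
    "p1": {"label": "Person", "name": "Ana"},
    "p2": {"label": "Person", "name": "Luis"},
    "p3": {"label": "Person", "name": "Marta"},
}
edges = [
    {"src": "p1", "dst": "p2", "label": "FOLLOWS", "since": 2016},
    {"src": "p2", "dst": "p3", "label": "FOLLOWS", "since": 2018},
]

# RDF: the same facts flattened into (subject, predicate, object) triples.
# Edge properties such as "since" have no direct slot and need extra modelling.
triples = {
    ("ex:p1", "rdf:type", "ex:Person"),
    ("ex:p1", "ex:name", "Ana"),
    ("ex:p2", "rdf:type", "ex:Person"),
    ("ex:p2", "ex:name", "Luis"),
    ("ex:p3", "rdf:type", "ex:Person"),
    ("ex:p3", "ex:name", "Marta"),
    ("ex:p1", "ex:follows", "ex:p2"),
    ("ex:p2", "ex:follows", "ex:p3"),
}


def follows_lpg(node_id):
    """Outgoing FOLLOWS neighbours in the property graph."""
    return [e["dst"] for e in edges if e["src"] == node_id and e["label"] == "FOLLOWS"]


def follows_rdf(subject):
    """The same lookup as a triple pattern: (subject, ex:follows, ?object)."""
    return sorted(o for s, p, o in triples if s == subject and p == "ex:follows")


def two_hops(start):
    """Nodes reachable in exactly two FOLLOWS steps (a typical graph traversal)."""
    return sorted({far for near in follows_lpg(start) for far in follows_lpg(near)})


print(two_hops("p1"), follows_rdf("ex:p1"))
```

Note how the property graph keeps `since` on the edge itself, while the triple form would need additional statements to express it.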

As Ian Robinson pointed out in his talk about Amazon Neptune, this type of database can serve many different use cases, but nowadays it is most commonly found in social network analysis, recommendation engines, fraud detection and knowledge graphs. Gradiant has used this type of database for the analysis of social networks (VIGIA and ATENEA projects).

Bonus track

Machine Learning and Edge computing

Today we have a large number of connected smart devices. It is important to know how to complement the intelligence of these devices (edge intelligence) with the intelligence that can be obtained from the cloud (cloud intelligence). Indeed, the Internet of Things (IoT) is the convergence of edge computing, cloud computing and artificial intelligence. In this context, it is very helpful to have algorithms that decide dynamically when to invoke the cloud or the device, and that allow predictions and inferences from both locations to be coordinated.
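One simple way to decide dynamically between device and cloud is confidence-based routing: answer on the device when the local model is confident, and fall back to the cloud otherwise. The sketch below assumes this strategy; it was not described in the talks. Both models, the sensor readings and the 0.8 threshold are hypothetical stand-ins.

```python
from typing import Tuple


def edge_model(reading: float) -> Tuple[str, float]:
    # Hypothetical lightweight on-device model: confident only far from its boundary.
    label = "anomaly" if reading > 50.0 else "normal"
    confidence = min(1.0, abs(reading - 50.0) / 25.0)
    return label, confidence


def cloud_model(reading: float) -> str:
    # Hypothetical heavier cloud model with a finer decision boundary.
    return "anomaly" if reading > 47.5 else "normal"


def predict(reading: float, threshold: float = 0.8) -> Tuple[str, str]:
    """Return (label, location), calling the cloud only for low-confidence readings."""
    label, confidence = edge_model(reading)
    if confidence >= threshold:
        return label, "edge"
    return cloud_model(reading), "cloud"


for reading in (10.0, 49.0, 90.0):
    print(reading, predict(reading))
```

The threshold trades latency and bandwidth against accuracy: a higher value sends more readings to the cloud.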

At Gradiant we have been working on IoT for several years and have focused our expertise on industry and the primary sector. We currently have several ongoing IoT and edge computing projects in these fields.

ML ensembles

Ensembles combine different ML models to improve predictive capability. They are used in supervised learning, mainly with classification algorithms: bagging (e.g. random forest) and boosting (e.g. gradient boosted trees) are two of the usual approaches. The idea is to combine weak learners into a strong learner that provides better overall performance (lower generalization error and higher prediction accuracy). Looking ahead, it is worth knowing that the same logic used in classifier ensembles can be applied to other techniques, such as clustering or feature selection.
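The bagging idea can be sketched in a few lines of plain Python: train several weak learners on bootstrap samples of the data and combine them by majority vote. The example below uses one-dimensional decision stumps as weak learners and a made-up dataset. It illustrates bagging only; a random forest additionally uses full decision trees and random feature subsets.

```python
import random
from collections import Counter


def fit_stump(samples):
    """Weak learner: the single threshold on x that best separates labels 0 and 1."""
    best = None
    for threshold, _ in samples:
        # A stump predicts 1 when x >= threshold; count its mistakes on the sample.
        errors = sum((x >= threshold) != bool(y) for x, y in samples)
        if best is None or errors < best[0]:
            best = (errors, threshold)
    return best[1]


def fit_bagging(samples, n_models, seed=0):
    rng = random.Random(seed)
    # Each stump is trained on a bootstrap sample (drawn with replacement).
    return [fit_stump(rng.choices(samples, k=len(samples))) for _ in range(n_models)]


def predict(thresholds, x):
    # Majority vote over the individual stump predictions.
    votes = Counter(int(x >= t) for t in thresholds)
    return votes.most_common(1)[0][0]


# Made-up 1-D dataset: small values belong to class 0, large values to class 1.
data = [(0.1, 0), (0.2, 0), (0.3, 0), (0.4, 0), (0.6, 1), (0.7, 1), (0.8, 1), (0.9, 1)]
model = fit_bagging(data, n_models=25)
print(predict(model, 0.05), predict(model, 0.95))
```

An odd number of models avoids tied votes in a two-class problem.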

At Gradiant we have been using these techniques for a long time. For example, the use of ensembles allowed us to obtain an outstanding result in the Kaggle competition for the Bosch challenge.

ML democratization

Taking the simplification of the process to the extreme, Google is working on full automation, offering tools that allow you to create custom ML models with minimal effort and little or no advanced knowledge. Cloud AutoML, with its flavors AutoML Translation, Natural Language and Vision, is the first proof of this. In this framework of “democratization” it is also important to mention Google’s recent launch of the AI Hub, a “catalogue” to discover, share and deploy AI solutions (including end-to-end pipelines, Jupyter notebooks, TensorFlow modules and other resources).

Quantum computation

Quantum computing is at a very early stage; however, it seems clear that AI and ML could be among the big winners. Using qubits (which can be in a superposition of states) instead of bits (binary state) could greatly accelerate some of the operations performed by current computers and, in some cases, exponentially accelerate algorithms currently used in ML. IBM has been working hard in this field for some years, and the company already has quantum computers running that can be programmed through Qiskit, a modular open source framework.
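To give an intuition for the difference between a bit and a qubit, the sketch below simulates a single qubit classically in plain Python. It does not use Qiskit or real quantum hardware, and it demonstrates no speed-up; it only shows a state with two amplitudes, the Hadamard gate that creates an equal superposition, and the resulting measurement probabilities.

```python
import math

# A single-qubit state is a pair of amplitudes (a0, a1) with |a0|^2 + |a1|^2 = 1.
ZERO = (1.0, 0.0)  # the state |0>, analogous to a classical bit set to 0


def hadamard(state):
    """Apply the Hadamard gate, which turns |0> into an equal superposition."""
    a0, a1 = state
    s = 1.0 / math.sqrt(2.0)
    return (s * (a0 + a1), s * (a0 - a1))


def probabilities(state):
    """Born rule: measurement probabilities are the squared amplitude magnitudes."""
    return tuple(abs(a) ** 2 for a in state)


superposed = hadamard(ZERO)
p0, p1 = probabilities(superposed)   # both outcomes are equally likely
back = hadamard(superposed)          # the Hadamard gate is its own inverse

print(p0, p1, back)
```

Simulating n qubits this way needs 2^n amplitudes, which is one way to see why classical simulation quickly becomes impractical.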

Conferences

- Alok Singh, IBM CODAIT. How to build high performing weighted XGBoost ML model for real life imbalance dataset

- Aljoscha Krettek, Data Artisans. The Evolution of (Open Source) Data Processing

- Matei Zaharia, Databricks. MLflow: Accelerating the Machine Learning Lifecycle

- Manish Gupta, Redis Labs. Charting Zero Latency Future with Redis

- Paco Nathan, Derwen, Inc. AI Adoption in Enterprise

- Catherine Zhou, Codecademy. Building a Data Science team from scratch

- Joey Frazee, Databricks. The lifecycle of machine learning & artificial intelligence

- Israel Herraiz, Google. Notebooks are not enough: how to deliver machine learning products without getting killed

- Holden Karau, Google. Big Data w/Python on Kubernetes (PySpark on K8s)

- Nick Pentreath, IBM CODAIT. Productionizing ML Pipelines with PFA

- Kai Wähner, Confluent. KSQL – The Open Source SQL Streaming Engine for Apache Kafka

- George Anadiotis, Linked Data Orchestration. The year of the graph: do you really need a graph database?

- Ian Robinson, Amazon Web Services. Get Connected – Building Connected Data Applications with Amazon Neptune

- Pablo Peris, Carlos de Huerta, Microsoft. Machine Learning on The Edge

- Verónica Bolón-Canedo, University of A Coruña. Two heads are better than one: Ensemble methods for the win?

- Elisa Martín, IBM Spain. AI, central element of the Information Systems in the next decade

- Denis Jannot, Mesosphere. All you need to build secure ML pipelines and cloud native applications

- Maciek Próchniak, TouK. Stream processing for analysts with Flink and Nussknacker

Author: Carlos Giraldo Rodríguez, research engineer in the Intelligent Systems department at Gradiant.