We constantly hear about Industry 4.0 and the use of technologies such as IoT and Artificial Intelligence to address the many problems and use cases that arise in a manufacturing environment. Typical examples include defect reduction, prescription of industrial process parameters, logistics optimization, and even end-user profiling and demand prediction. We know the goal, and we are often swept along by waves of technological innovation that promise greater competitiveness, but do we know which means to use, and do we use them properly? To respond to the needs that arise at the different levels and departments of an industrial company, the appropriate tools must first be in place.

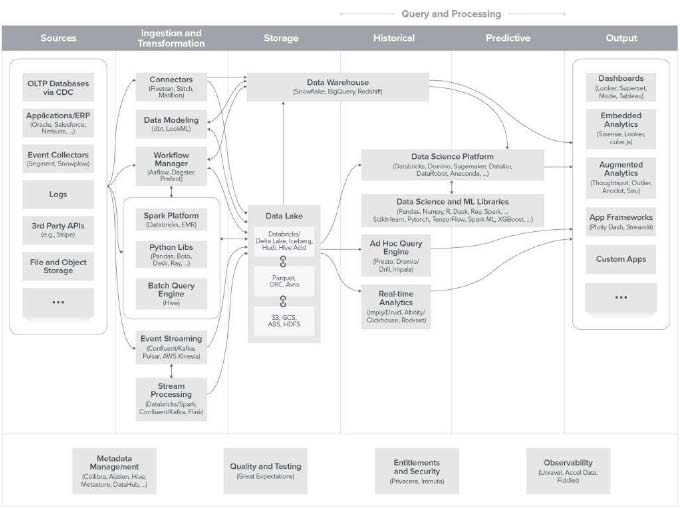

The emergence of these new decision support use cases calls for analytics platforms that can handle the volume, velocity and variety of data produced in highly heterogeneous environments. Broadly speaking, these platforms combine several types of technologies for data ingestion, storage and analysis, supported by a layer of applications and business workflows.

Figure: Examples of the various modules that can form a data processing architecture. Source: Emerging Architectures for Modern Data Infrastructure
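To make the three layers mentioned above (ingestion, storage and analysis) more concrete, the following is a minimal sketch in Python. It is only an illustration under our own assumptions: the names `Reading`, `InMemoryStore`, `ingest` and `average_value` are hypothetical, the store is a plain in-memory list, and a real platform would replace each piece with dedicated technology.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List, Optional


@dataclass
class Reading:
    """One measurement from a (hypothetical) plant sensor."""
    sensor_id: str
    timestamp: float  # seconds since the start of the capture
    value: float


class InMemoryStore:
    """Storage layer stand-in; a real platform would use a database or data lake."""

    def __init__(self) -> None:
        self._rows: List[Reading] = []

    def write(self, reading: Reading) -> None:
        self._rows.append(reading)

    def by_sensor(self, sensor_id: str) -> List[Reading]:
        return [r for r in self._rows if r.sensor_id == sensor_id]


def ingest(raw_rows: List[Dict], store: InMemoryStore) -> int:
    """Ingestion layer: validate raw records and hand the valid ones to storage."""
    accepted = 0
    for row in raw_rows:
        try:
            reading = Reading(
                str(row["sensor_id"]),
                float(row["timestamp"]),
                float(row["value"]),
            )
        except (KeyError, TypeError, ValueError):
            continue  # skip malformed records instead of failing the whole batch
        store.write(reading)
        accepted += 1
    return accepted


def average_value(store: InMemoryStore, sensor_id: str) -> Optional[float]:
    """Analysis layer: a trivial aggregate over the stored readings."""
    values = [r.value for r in store.by_sensor(sensor_id)]
    return mean(values) if values else None
```

Keeping the layers behind small, separate functions is one possible design choice: it lets each layer be swapped for a production-grade component without touching the others.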

Industry 4.0 success in ten steps

Given the complexity of tackling a project of this kind, with its wide range of scenarios and technologies to consider, in this post we offer our contribution, built on our accumulated experience in digitization both for industry (Congals4.0 with Congalsa, Sea2Table with Nueva Pescanova and Facendo 4.0 with Stellantis) and for other sectors.

These are our 10 tips for implementing a data analysis architecture for industry:

- Work with experts. Undertaking a data capture and analysis project from scratch can be especially complex and overwhelming because of the vast range of technologies currently available for different needs. The learning curve is not short either. Relying on a team of experts to develop a project of this type will lower costs, and they will be able to advise you on the best technology for your specific use case.

- Start by defining clear use cases. Projects of this kind often begin by attempting to monitor the entire plant and all its processes, without a clear idea of where you want to go. This path has a high probability of failure. First, define the specific use cases you want to address, set objectives and, where possible, choose business indicators that let you evaluate progress.

- Analyze the current process and improve it before digitizing it. Another common mistake is to digitize a process as it is today, with all its defects and waste. It is difficult to eliminate every inefficiency in a process, but before transferring them to the digital world it is advisable to detect, evaluate and eliminate them as far as possible.

- Include security from the beginning of the project. All data projects must follow an approach that applies the principles of Security & Privacy by Design from the beginning. That is, security and privacy should not be postponed until the project is advanced, since it is very likely that fixing some problems will then be more expensive than if they had been taken into account from the beginning.

- Test and run pilots in a production environment. Preliminary pilots make it possible to evaluate technologies, their suitability and how they should be deployed. They also let you analyze how the solution will evolve in the long term and which directions are best for maximizing ROI. Setbacks or changes in the initial conditions should not be interpreted as a failure, but as part of the process towards a viable final solution.

- Establish a specific plan for integrating legacy data and devices. Few companies have the funding to update all their systems and machinery to the latest technologies at once, and in any case the change will be implemented gradually. You therefore need a plan that addresses how to retrofit older devices and integrate them into a data processing architecture.

- Accept that building analytical models takes time. Data collection is a time-consuming process, and analysis will not be perfect right out of the box. The data patterns associated with anomalies and failures are more important than the normal operating states of the equipment, but they are harder to capture because these situations do not occur often. To ensure data quality and the integrity of data collection over the long term, IIoT projects must start early and collect and manage data systematically in order to achieve useful analytical models.

- Train all staff in new technologies. It is very important that company staff understand the technologies being used from the beginning of the project, both at the plant level, where applicable, and at the management level. The objective should be to help the company's workers do their tasks better and with greater value, not to make them irrelevant. Adding extra sensors or monitoring variables indirectly should be considered, and sometimes, with some creativity, the need for new data capture systems can even be reduced. At this point, the opinion of the staff is essential for deciding what should be monitored in a process of this kind, and how.

- Maintain continuous contact with experts in the field of application. Expert personnel play a key role, especially in the initial phases of a data intelligence project. Domain knowledge is necessary to narrow the scope of monitoring to the data most likely to be relevant, and to interpret it (what the data says about assets and processes).

- Treat it as an ongoing process. Like any continuous improvement process in the company, data processing and its associated tools must be incorporated into this philosophy. Processes change and the associated models will have to be adjusted periodically, software updates have to be carried out, and new technologies emerge that can further optimize some steps. The PDCA (Plan-Do-Check-Act) cycle remains a perfectly valid and applicable approach in this situation.
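The tip on analytical models notes that anomalies and failures occur rarely, while normal operating data is plentiful. One common way to work with that imbalance, shown here purely as an illustrative sketch and not as the method used in the projects mentioned in this post, is to describe normal behavior first and flag readings that deviate strongly from it. The function name `flag_anomalies`, the default threshold of 3 standard deviations and the sample values are our own assumptions.

```python
from statistics import mean, stdev
from typing import List


def flag_anomalies(
    baseline: List[float],
    new_values: List[float],
    threshold: float = 3.0,  # assumed cut-off, in standard deviations
) -> List[int]:
    """Return the indices of new_values that deviate from the baseline mean
    by more than `threshold` baseline standard deviations."""
    if len(baseline) < 2:
        raise ValueError("need at least two baseline readings")
    centre = mean(baseline)
    spread = stdev(baseline)
    if spread == 0:
        # Flat baseline: any value different from it counts as a deviation.
        return [i for i, v in enumerate(new_values) if v != centre]
    return [
        i for i, v in enumerate(new_values)
        if abs(v - centre) / spread > threshold
    ]


# Hypothetical temperature readings taken during normal operation.
normal_operation = [20.0, 20.5, 19.5, 20.2, 19.8]
print(flag_anomalies(normal_operation, [20.1, 25.0, 19.9]))
```

A rule this simple will not replace a trained model, but it only needs normal operating data, which is available from the first day of data collection, and it fits the periodic readjustment described in the last tip: the baseline can be recomputed whenever the process changes.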

Congals4.0 is co-financed by the Consellería de Economía, Emprego e Industria and the Agencia Gallega de Innovación (Galician Innovation Agency), through the 2nd edition of the Industrias do Futuro 4.0 – Fábrica Intelixente 2019 program, and is supported by the European Regional Development Fund (ERDF) within the framework of the ERDF Galicia 2014-2020 Operational Program.

Facendo 4.0 (Industrial Competitiveness and Electromobility through Innovation and Digital Transformation) is the project launched by Stellantis Vigo under the fourth call for grants of the Fábrica Intelixente program of the Xunta de Galicia, with the aim of helping to increase competitiveness and strengthen the business fabric of Galicia's automotive sector.

Sea2Table is part of the ‘Fábrica del futuro, fábrica inteligente y sostenible de la industria 4.0’ program. It is subsidized by the Axencia Galega de Innovación and supported by the Vicepresidencia Segunda and the Consellería de Economía, Empresa e Innovación of the Xunta de Galicia, and the grant is co-financed by the European Regional Development Fund (ERDF) within the framework of the ERDF Galicia 2014-2020 Operational Program.